Tools for your LLM: A deep dive into MCP

MCP is a technology that can turn LLMs into actual agents, because it gives your LLM tools it can use to retrieve live information or carry out actions on your behalf.

Like any other tool in the toolbox, I believe you have to understand MCP well to apply it effectively. So I approached it in my usual way: I got my hands on it, poked at it, took it apart, put it back together, and made it work again.

This week’s goals:

- Get a solid understanding of MCP: what is it?

- Build an MCP server and connect it to an LLM

- Understand when to use MCP

- Explore the considerations around MCP

1) What is MCP?

MCP (Model Context Protocol) is a protocol designed to extend the capabilities of LLM clients. An LLM client is anything that runs an LLM: think Claude, ChatGPT, or your own LangGraph agentic chatbot. In this article, we'll use Claude Desktop as the LLM client and build an MCP server that extends its capabilities.

Let’s first understand what MCP really is.

A useful analogy

Think of MCP the way you think of browser extensions. A browser extension adds capabilities to your browser; an MCP server adds capabilities to your LLM. In both cases, you provide a small program that the client (browser or LLM) can load and communicate with to do more.

This small program is called an MCP server, and LLM clients can use it to, for example, retrieve information or perform actions.

When is a program an MCP server?

Any program can become an MCP server as long as it implements the Model Context Protocol. The protocol specifies:

- What functionality the server exposes (capabilities)

- How that functionality is described (tool metadata)

- How the LLM client calls it (JSON request formats)

- How the server responds (JSON result formats)

An MCP server is any program that follows the MCP message rules. The language, runtime, or location it runs in doesn't matter.

Its main responsibilities are to:

- Declare tools

- Accept tool-call requests

- Execute the requested function

- Return a result or an error

Example of a tool call message:

```json
{
  "method": "tools/call",
  "params": {
    "name": "get_weather",
    "arguments": {"city": "Groningen"}
  }
}
```

Sending this JSON means: “Call the get_weather function with the argument city=’Groningen’.”
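Under the hood, MCP messages travel as JSON-RPC 2.0, so a real request also carries a `"jsonrpc": "2.0"` field and an `id`. The sketch below is a toy, stdlib-only dispatcher (not the official SDK) that illustrates the four responsibilities listed above: declaring a tool, accepting a `tools/call` request, executing the function, and returning a result or an error. The `TOOLS` registry, the `handle` function and the canned weather reply are hypothetical, and the tool list is simplified (real servers also advertise an input schema per tool). The `content`/`isError` result shape follows my reading of the MCP spec and may differ in detail between protocol versions.

```python
import json

# Hypothetical tool registry: name -> (description, function)
TOOLS = {
    "get_weather": (
        "Get the current weather for a city",
        lambda city: f"It is 12 degrees and cloudy in {city}",  # canned data, no real API
    ),
}


def handle(raw: str) -> str:
    """Handle one JSON-RPC request string and return the JSON-RPC response string."""
    request = json.loads(raw)
    response = {"jsonrpc": "2.0", "id": request.get("id")}

    if request.get("method") == "tools/list":
        # Declare tools: the names and descriptions the client shows the model
        response["result"] = {
            "tools": [{"name": name, "description": desc} for name, (desc, _) in TOOLS.items()]
        }
    elif request.get("method") == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # Unknown tool: answer with a JSON-RPC error object
            response["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        else:
            # Execute the function with the arguments the model chose
            text = tool[1](**params.get("arguments", {}))
            response["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        response["error"] = {"code": -32601, "message": "Method not found"}

    return json.dumps(response)


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Groningen"}},
}
print(handle(json.dumps(request)))
```

The client matches each response to its request through the `id`, which is what allows several tool calls to be in flight over the same connection.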

2) Create an MCP server

Since any program can be an MCP server, let’s create one.

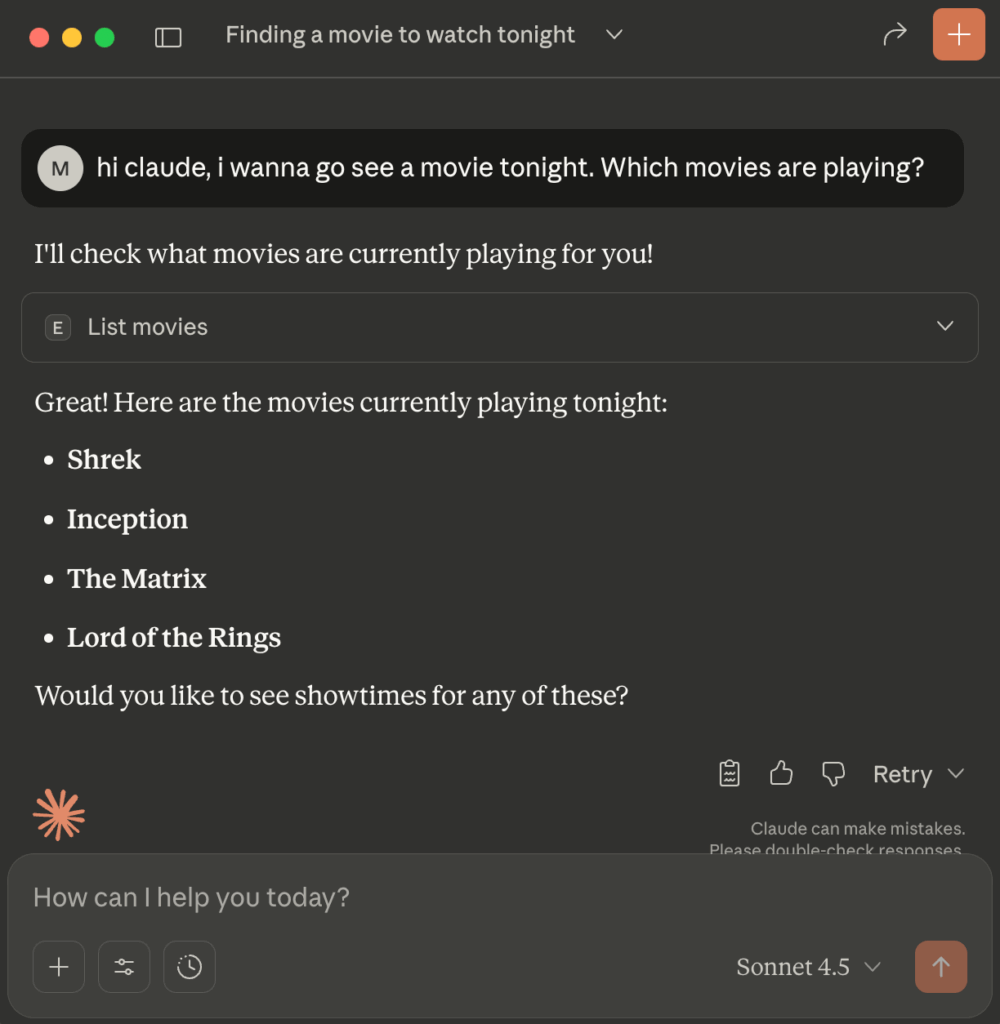

Imagine we work at a movie theater and want to let agents help people buy tickets. This way, a user can pick a movie by chatting with ChatGPT, or simply instruct Claude to buy tickets.

Of course, LLMs don’t know what’s happening in our cinema, so we need to expose our cinema API through MCP so that LLMs can interact with it.

The simplest MCP server possible

We’ll use fastmcp, a Python package that wraps Python functions so that they conform to the MCP specification. We can “present” this code to the LLM so that it knows about the functionality and can call it.

```python
from fastmcp import FastMCP

# Create a named MCP server
mcp = FastMCP("example_server")


@mcp.tool()
def list_movies() -> list[str]:
    """List the movies that are currently playing."""
    # Simulate a GET request to our /movies endpoint
    return ["Shrek", "Inception", "The Matrix", "Lord of the Rings"]


if __name__ == "__main__":
    # Runs over stdio by default, which is how Claude Desktop talks to local servers
    mcp.run()
```

The code above defines the server and registers the tool. The docstring and type hints help fastmcp describe the tool to the LLM client (as the protocol requires). Based on this description, the agent decides whether the tool is suitable for the task it has been given.
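fastmcp does this for you, but to make the idea concrete, here is a simplified sketch of how a tool description can be derived from a function’s name, docstring and type hints using only the standard library. `describe_tool` and `JSON_TYPES` are my own hypothetical names, not part of fastmcp, and fastmcp’s real output is a fuller JSON Schema.

```python
import inspect
import typing

# Hypothetical mapping from Python types to JSON Schema type names
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build a simplified MCP-style tool description from a Python function."""
    hints = typing.get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Fall back to "string" for types this sketch doesn't map
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the model must supply it
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str, days: int = 1) -> str:
    """Get the weather forecast for a city."""
    return f"Sunny in {city} for {days} day(s)"


print(describe_tool(get_weather))
```

This is why a clear docstring and accurate type hints matter: they are essentially the only information the model gets when deciding whether and how to call your tool.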

Connecting Claude Desktop to the MCP server

For our LLM client to be “aware” of the MCP server, we have to tell it where to find the program. We register our new server in Claude Desktop by opening Settings -> Developer and editing claude_desktop_config.json so it looks like this:

```json
{
  "mcpServers": {
    "cinema_server": {
      "command": "/Users/mikehuls/explore_mcp/.venv/bin/python",
      "args": [
        "/Users/mikehuls/explore_mcp/cinema_mcp.py"
      ]
    }
  }
}
```

Now that our MCP server is registered, Claude can use it, for example by calling list_movies(). The functions in registered MCP servers become first-class tools that an LLM can decide to use.
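If you’d rather not edit the file by hand, a small script can add the entry for you. This is a sketch under assumptions: `register_server` and `main` are hypothetical helpers, and `CONFIG_PATH` is the usual macOS location, which may differ on your system. Note that `args` must be a JSON array, which is why it is a Python list here.

```python
import json
from pathlib import Path

# Usual location on macOS; adjust for your OS/installation (assumption)
CONFIG_PATH = Path.home() / "Library/Application Support/Claude/claude_desktop_config.json"


def register_server(config: dict, name: str, command: str, args: list[str]) -> dict:
    """Return a copy of the config with an MCP server entry added or replaced."""
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))  # keep any servers already registered
    servers[name] = {"command": command, "args": args}
    updated["mcpServers"] = servers
    return updated


def main() -> None:
    """Read the existing config (if any), add our server, and write it back."""
    config = json.loads(CONFIG_PATH.read_text()) if CONFIG_PATH.exists() else {}
    config = register_server(
        config,
        "cinema_server",
        "/Users/mikehuls/explore_mcp/.venv/bin/python",
        ["/Users/mikehuls/explore_mcp/cinema_mcp.py"],
    )
    CONFIG_PATH.parent.mkdir(parents=True, exist_ok=True)
    CONFIG_PATH.write_text(json.dumps(config, indent=2))
```

Call `main()` once, then restart Claude Desktop so it picks up the new configuration.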

Claude executes the function from our MCP server and gets access to the resulting value. Very easy, in just a few lines of code.

With a few extra lines, we can wrap more API endpoints in our MCP server: we let the LLM call functions that return screening times, and even let it act on our behalf by making a reservation.
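Those extra endpoints could look something like the sketch below. `list_screening_times`, `book_ticket`, the in-memory `SCREENINGS` data and the booking-reference format are all hypothetical stand-ins for real calls to a cinema API. They are plain, type-hinted functions with docstrings, so in the server above you would register each one with the same `@mcp.tool()` decorator. Raising an exception is how this sketch signals a failed booking; fastmcp should report such errors back to the model as a tool error, but check the docs for your version.

```python
import itertools

# Hypothetical in-memory stand-in for the cinema API: movie -> {time: free seats}
SCREENINGS = {
    "Shrek": {"18:00": 40, "20:30": 12},
    "Inception": {"19:00": 3, "21:45": 25},
}
_booking_ids = itertools.count(1)  # simple counter for fake booking references


def list_screening_times(movie: str) -> list[str]:
    """List the screening times for a movie that still have free seats."""
    return [time for time, free in SCREENINGS.get(movie, {}).items() if free > 0]


def book_ticket(movie: str, time: str, seats: int = 1) -> str:
    """Book seats for a screening and return a booking reference."""
    free = SCREENINGS.get(movie, {}).get(time)
    if free is None:
        raise ValueError(f"No screening of {movie!r} at {time}")
    if seats < 1 or seats > free:
        raise ValueError(f"Cannot book {seats} seat(s); {free} available")
    SCREENINGS[movie][time] = free - seats  # simulate the POST /bookings call
    return f"BOOKING-{next(_booking_ids):04d}"


print(list_screening_times("Inception"))
print(book_ticket("Inception", "19:00", seats=2))
```

The read-only tool and the tool that changes state are kept separate on purpose: it makes it easier to decide which actions you want the LLM to be able to take without asking you first.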

Note that although these examples are deliberately simplified, the principle stays the same: through the cinema API, we let our LLM retrieve information and act on our behalf.

3) When to use MCP

MCP is ideal when:

- You want an LLM to access live data

- You want an LLM to perform actions (creating tasks, fetching files, writing logs)

- You want to expose internal systems in a controlled manner

- You want to share your tools with others as a package they can plug into their LLM

Users benefit because MCP lets their LLM become a more powerful assistant.

Service providers benefit because MCP lets them expose their systems securely and consistently.

A common pattern is a “tool kit” that exposes back-end APIs. Instead of clicking through UI screens, the user can ask an assistant to handle the workflow for them.

4) Considerations

Since its release in November 2024, MCP has been widely adopted and has quickly become the default way to connect AI agents to external systems. But it is not without trade-offs: MCP introduces real architectural overhead and security risks that, in my opinion, engineers should be aware of before using it in production.

A) Security

If you download an unknown MCP server and connect it to an LLM, you are effectively granting that server file and network access, access to local credentials, and permission to execute commands. A malicious tool could:

- Read or delete files

- Exfiltrate private data (.ssh keys, for example)

- Scan your network

- Modify production systems

- Steal tokens and keys

MCP is only as safe as the servers you choose to trust. Without guardrails, you give the LLM complete control over your machine, and because adding tools is so easy, it is also very easy to overexpose.

The browser extension analogy applies here too: most extensions are safe, but malicious ones can cause real harm. As with browser extensions, use trusted sources such as verified repositories, inspect the source code when possible, and run servers in a sandbox when you’re unsure. Enforce strict permissions and least-privilege policies.
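When you write your own servers, least privilege can start inside each tool: only let it touch what it strictly needs. Below is a hypothetical sketch of a file-reading tool function (`read_note`, `is_allowed` and the `ALLOWED_DIR` sandbox are illustrative names) that refuses any path resolving outside one allowed directory, which blocks tricks like `../../.ssh/id_rsa`. It complements, and does not replace, real sandboxing such as running the server in a container.

```python
import tempfile
from pathlib import Path

# Hypothetical sandbox: the only directory this tool may read from
ALLOWED_DIR = Path(tempfile.mkdtemp()).resolve()


def is_allowed(relative_path: str) -> bool:
    """True if the path, after resolving '..' and symlinks, stays inside ALLOWED_DIR."""
    return (ALLOWED_DIR / relative_path).resolve().is_relative_to(ALLOWED_DIR)


def read_note(relative_path: str) -> str:
    """Read a text file, but only if it lies inside ALLOWED_DIR."""
    if not is_allowed(relative_path):
        raise PermissionError(f"Access outside the sandbox denied: {relative_path}")
    return (ALLOWED_DIR / relative_path).resolve().read_text()


(ALLOWED_DIR / "todo.txt").write_text("Buy popcorn")
print(read_note("todo.txt"))
```

Absolute paths are rejected too: joining `/etc/passwd` onto `ALLOWED_DIR` yields `/etc/passwd` itself, which is not inside the sandbox. `Path.is_relative_to` requires Python 3.9 or later.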

B) Context window bloat, token inefficiency and latency

MCP servers describe each tool in detail: names, argument schemas, descriptions, and result formats. The LLM client loads all this metadata upfront into the model context so it knows what tools exist and how to use them.

This means that if your agent uses many complex tools or schemas, the prompt can grow significantly. Not only does this consume a lot of tokens, it also eats into the space left for chat history and task instructions. Every tool you expose permanently takes up a slice of the available context.
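To get a feel for this overhead, you can measure how large your tool definitions are once serialized. The sketch below uses a crude rule of thumb of roughly four characters per token; that ratio is an assumption, and real counts depend on the model’s tokenizer and on how the client formats the tool list. The two tool definitions are hypothetical.

```python
import json

# Hypothetical tool definitions, in the shape an MCP server might advertise them
TOOLS = [
    {
        "name": "list_movies",
        "description": "List the movies that are currently playing.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {
        "name": "book_ticket",
        "description": "Book seats for a screening and return a booking reference.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "movie": {"type": "string"},
                "time": {"type": "string"},
                "seats": {"type": "integer"},
            },
            "required": ["movie", "time"],
        },
    },
]


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; use the model's real tokenizer for exact numbers."""
    return round(len(text) / chars_per_token)


for tool in TOOLS:
    serialized = json.dumps(tool)
    print(f"{tool['name']}: {len(serialized)} chars, ~{estimate_tokens(serialized)} tokens")

print(f"All tools: ~{estimate_tokens(json.dumps(TOOLS))} tokens of context")
```

Two small tools are cheap, but the total scales with every tool and every schema field you add, across every MCP server you connect.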

In addition, each tool call adds reasoning overhead, schema parsing, context re-assembly, and a full round trip from the model -> MCP client -> MCP server -> back to the model. This is far too heavy for latency-sensitive pipelines.

C) Complexity is transferred to the model

The LLM must make all the difficult decisions:

- Whether to call a tool at all

- Which tool to call

- Which arguments to use

All of this happens inside the model’s reasoning rather than in explicit orchestration logic. Although this feels convenient, even magical, at first, at scale it can become unpredictable, hard to debug, and hard to make deterministic.
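One way to claw back some predictability is to treat the model’s tool choice as untrusted input and check it in plain code before anything runs. The sketch below is hypothetical: `validate_call` and the `SCHEMAS` table are my own names, and it only checks for unknown tools, missing or unexpected arguments, and basic Python types rather than full JSON Schema. It returns a list of problems, so a rejected call is easy to log and debug.

```python
# Hypothetical schemas for the tools our server exposes: argument -> Python type
SCHEMAS = {
    "list_movies": {"required": {}, "optional": {}},
    "book_ticket": {"required": {"movie": str, "time": str}, "optional": {"seats": int}},
}


def validate_call(name: str, arguments: dict) -> list[str]:
    """Return a list of problems with a model-proposed tool call (empty if OK)."""
    schema = SCHEMAS.get(name)
    if schema is None:
        return [f"unknown tool: {name}"]
    allowed = {**schema["required"], **schema["optional"]}
    # First report required arguments the model left out
    problems = [f"missing argument: {arg}" for arg in schema["required"] if arg not in arguments]
    # Then check every argument the model did supply
    for arg, value in arguments.items():
        if arg not in allowed:
            problems.append(f"unexpected argument: {arg}")
        elif not isinstance(value, allowed[arg]):
            problems.append(f"{arg} should be {allowed[arg].__name__}")
    return problems


print(validate_call("book_ticket", {"movie": "Shrek", "time": "18:00", "seats": 2}))
print(validate_call("book_ticket", {"movie": "Shrek", "seats": "two"}))
print(validate_call("cancel_everything", {}))
```

This doesn’t make the model’s decisions deterministic, but it gives you a fixed, inspectable checkpoint between what the model proposes and what actually executes.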

Conclusion

MCP is simple and powerful at the same time. It is a standardized way to let LLMs call real software. Once a program implements MCP, any compatible LLM client can use it as an extension. This opens the door to assistants that can query APIs, perform tasks and interact with real systems in a structured way.

But with great power comes great responsibility. Treat MCP servers with the same caution you would give any software that has full access to your machine. Their design also has consequences for token usage, latency, and the reasoning load on the LLM. These trade-offs can undermine the very benefit MCP is known for: turning agents into effective real-world tools.

Used intentionally and safely, MCP provides a clean foundation for building agentic assistants that actually do things instead of just talking about them.

I hope this article is as clear as I intended it to be, but if not, please let me know what I can clarify further. In the meantime, check out my other articles on all kinds of programming-related topics.

Happy coding!

— Mike

PS: Like what I do? Follow me!
